About me

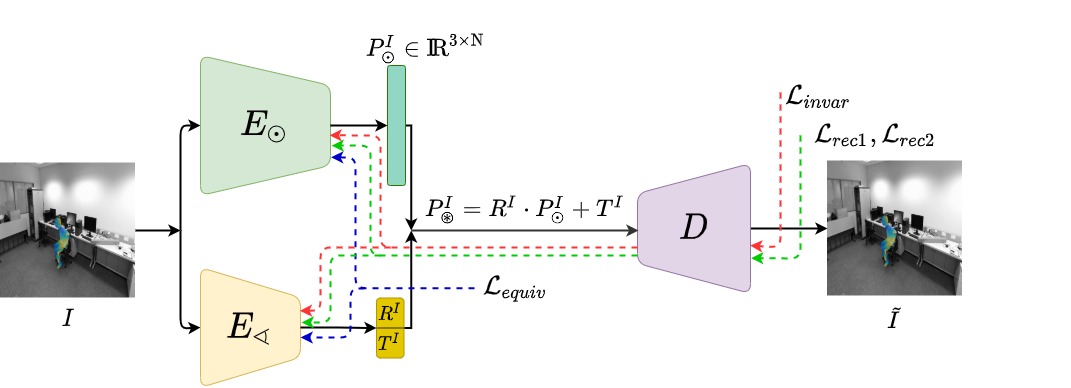

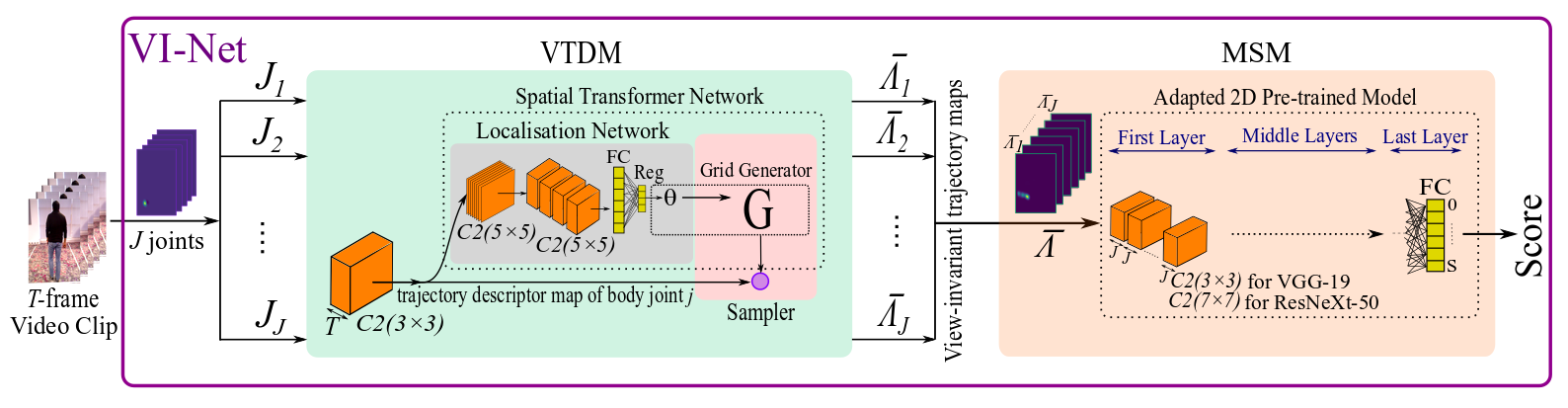

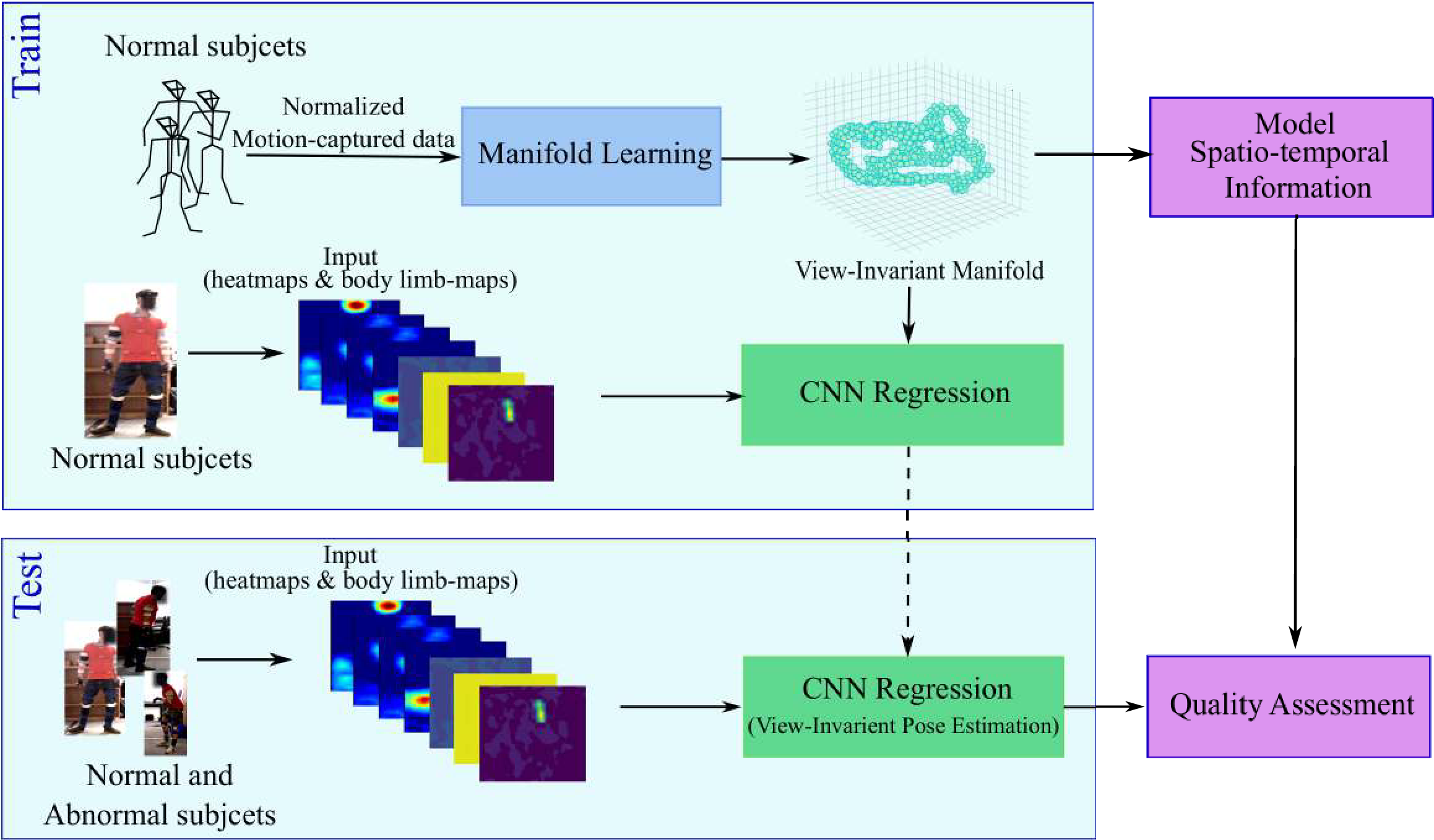

I am a final-stage Ph.D. student in Computer Vision and Deep Learning at the Visual Information Laboratory, University of Bristol, Bristol, UK, where I work on 3D human posture analysis for video understanding, e.g. action recognition and human movement assessment in healthcare applications, under the supervision of Professor Majid Mirmehdi. Prior to that, I completed my master's degree in Artificial Intelligence at Shahid Beheshti University (formerly the National University of Iran), Tehran, Iran. During my master's, I worked on object tracking methods.

I am passionate about understanding images and videos with little or no human supervision, and about the unsupervised generation of synthetic data.